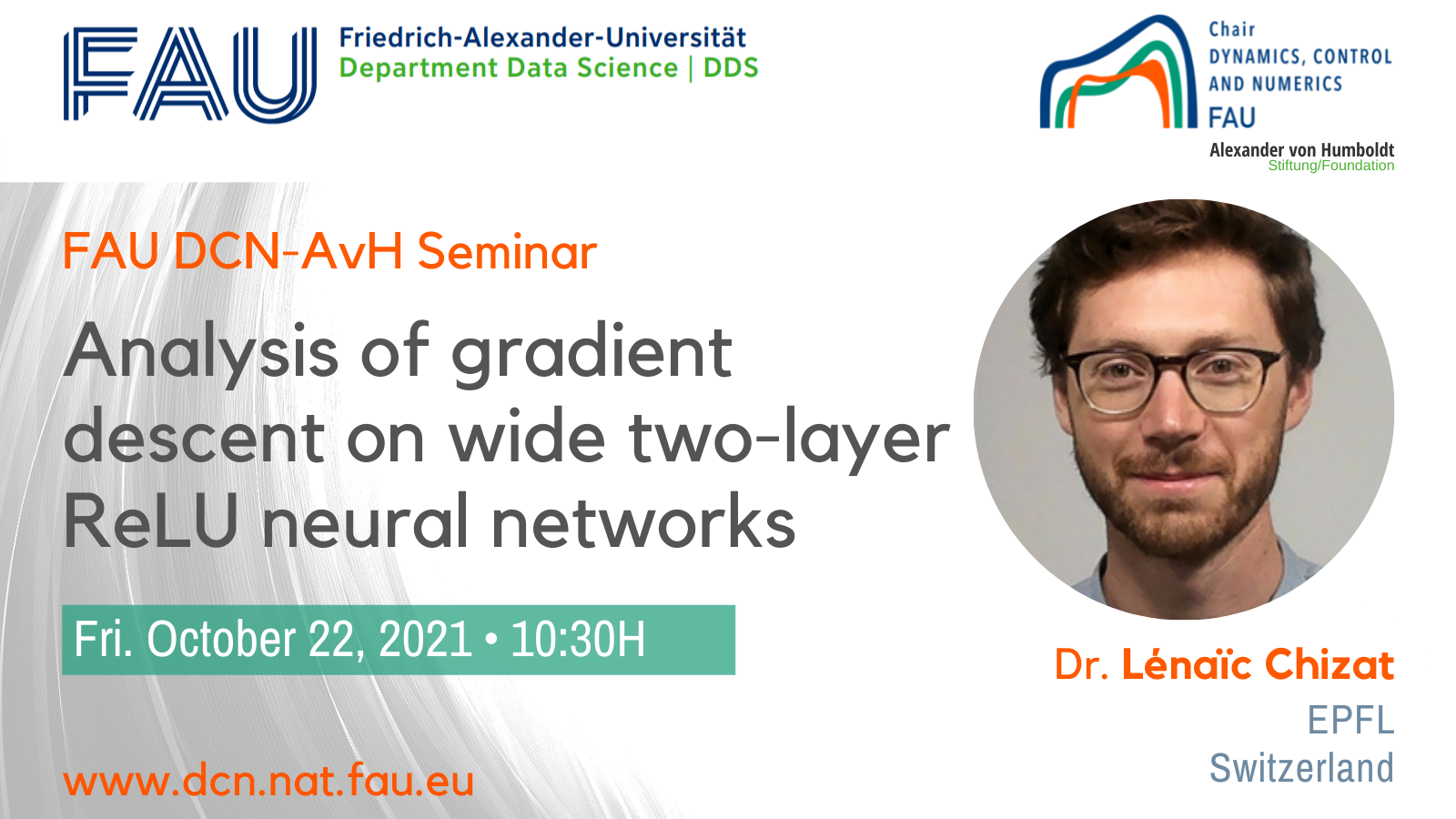

Analysis of gradient descent on wide two-layer ReLU neural networks

Speaker: Dr. Lénaïc Chizat

Affiliation: EPFL, École Polytechnique Fédérale de Lausanne (Switzerland)

Organized by: FAU DCN-AvH, Chair for Dynamics, Control and Numerics – Alexander von Humboldt Professorship at FAU Erlangen-Nürnberg (Germany)

Zoom meeting link

Meeting ID: 615 4539 3381 | PIN: 304949

Abstract. In this talk, we propose an analysis of gradient descent on wide two-layer ReLU neural networks that leads to sharp characterizations of the learned predictor. The main idea is to study the training dynamics in the limit where the width of the hidden layer goes to infinity, in which case they become a Wasserstein gradient flow. Although these dynamics evolve on a non-convex landscape, we show that, for appropriate initializations, their limit, when it exists, is a global minimizer. We also study the implicit regularization of this algorithm when the objective is the unregularized logistic loss, which leads to a max-margin classifier in a certain functional space. Finally, we discuss what these results tell us about generalization performance, and in particular how these models compare to kernel methods.
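As a rough illustration of the setting described in the abstract, the following minimal sketch trains a finite-width two-layer ReLU network with plain gradient descent on the unregularized logistic loss. It is not the speaker's code: the 1/m output scaling, the step size multiplied by the width m, the Gaussian and random-sign initialization, the toy 2D data set, and all function names (`predict`, `gd_step`, `train`, and so on) are assumptions chosen for illustration.

```python
import math
import random


def relu(z):
    return z if z > 0.0 else 0.0


def predict(a, b, x):
    """Two-layer ReLU network with 1/m scaling: f(x) = (1/m) * sum_j b_j * relu(a_j . x)."""
    m = len(b)
    total = 0.0
    for aj, bj in zip(a, b):
        pre = sum(w * xi for w, xi in zip(aj, x))
        total += bj * relu(pre)
    return total / m


def softplus(z):
    # Numerically stable log(1 + exp(z)).
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))


def logistic_loss(a, b, xs, ys):
    """Unregularized logistic loss, averaged over the samples."""
    return sum(softplus(-y * predict(a, b, x)) for x, y in zip(xs, ys)) / len(xs)


def gd_step(a, b, xs, ys, lr):
    """One full-batch gradient descent step on both layers."""
    m, n, d = len(b), len(xs), len(xs[0])
    grad_a = [[0.0] * d for _ in range(m)]
    grad_b = [0.0] * m
    for x, y in zip(xs, ys):
        margin = y * predict(a, b, x)
        # Derivative of the averaged loss with respect to f(x):
        # -y * sigmoid(-margin) / n, written with tanh for stability.
        g = -y * 0.5 * (1.0 - math.tanh(0.5 * margin)) / n
        for j in range(m):
            pre = sum(w * xi for w, xi in zip(a[j], x))
            if pre > 0.0:  # ReLU is active for this neuron and sample
                grad_b[j] += g * pre / m
                for k in range(d):
                    grad_a[j][k] += g * b[j] * x[k] / m
    # Assumption: the step size is scaled by m so that each neuron moves
    # at a rate independent of the width.
    step = lr * m
    new_a = [[a[j][k] - step * grad_a[j][k] for k in range(d)] for j in range(m)]
    new_b = [b[j] - step * grad_b[j] for j in range(m)]
    return new_a, new_b


def make_data(n, rng):
    """Toy separable data: label is the sign of the first coordinate."""
    xs, ys = [], []
    while len(xs) < n:
        x = [rng.uniform(-1.0, 1.0), rng.uniform(-1.0, 1.0)]
        if abs(x[0]) >= 0.2:  # keep a margin around the decision boundary
            xs.append(x)
            ys.append(1.0 if x[0] > 0.0 else -1.0)
    return xs, ys


def train(m=30, n=30, steps=200, lr=0.1, seed=0):
    rng = random.Random(seed)
    xs, ys = make_data(n, rng)
    a = [[rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)] for _ in range(m)]
    b = [rng.choice([-1.0, 1.0]) for _ in range(m)]
    initial = logistic_loss(a, b, xs, ys)
    for _ in range(steps):
        a, b = gd_step(a, b, xs, ys, lr)
    return initial, logistic_loss(a, b, xs, ys)


if __name__ == "__main__":
    initial, final = train()
    print(f"logistic loss: {initial:.4f} -> {final:.4f}")
```

Increasing `m` in `train` gives a crude way to probe the wide-network regime the talk analyzes. The sketch only shows the finite-width algorithm and makes no claim about the limiting Wasserstein gradient flow itself.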