Large-time asymptotics in Deep Learning

This Friday, October 23rd, Borjan Geshkovski, PhD student at CMC Deusto on the DyCon ERC Project from UAM, will give a talk at the “Seminario de estadística”, organized by the UAM – Universidad Autónoma de Madrid and held via Teams, on:

Large-time asymptotics in Deep Learning

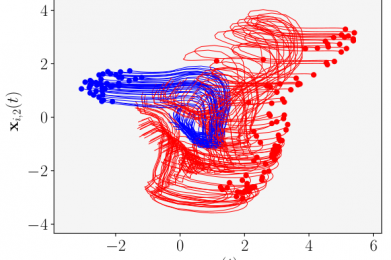

Abstract. It is by now well known that practical deep supervised learning may roughly be cast as an optimal control problem for a specific discrete-time, nonlinear dynamical system called an artificial neural network. In this talk, we consider the continuous-time formulation of the deep supervised learning problem. Using an analytical approach, we will present this problem’s behavior as the final time horizon increases, which can be interpreted as increasing the number of layers in the neural network setting. We show qualitative and quantitative estimates of the convergence to the zero training error regime, depending on the functional to be minimized.
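
To make the link between time horizon and depth concrete, here is a minimal sketch, not the formulation used in the talk. It assumes a scalar residual network with a tanh activation, where each layer is one forward-Euler step of size h for the ODE x'(t) = tanh(w(t) x(t) + b(t)). The function names, the activation, and the constant weights are all hypothetical choices made for illustration.

```python
import math


def residual_forward(x0, weights, biases, h=1.0):
    """Forward pass of a scalar residual network (illustrative assumption).

    Each layer applies x <- x + h * tanh(w * x + b), which is one
    forward-Euler step of size h for x'(t) = tanh(w(t) x(t) + b(t)).
    """
    x = x0
    for w, b in zip(weights, biases):
        x = x + h * math.tanh(w * x + b)
    return x


def layers_for_horizon(T, h=1.0):
    """Number of layers needed to cover the time horizon [0, T] with step h."""
    return round(T / h)


# Constant, hypothetical controls (weights and biases), for illustration only.
h = 0.5
for T in (1.0, 2.0, 4.0):
    n = layers_for_horizon(T, h)
    out = residual_forward(0.1, [1.0] * n, [0.0] * n, h)
    print(f"T={T}: {n} layers, output={out:.4f}")
```

With the step size h held fixed, doubling the horizon T doubles the number of layers, which is the sense in which a larger time horizon corresponds to a deeper network in this sketch.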

Join this session via Teams
