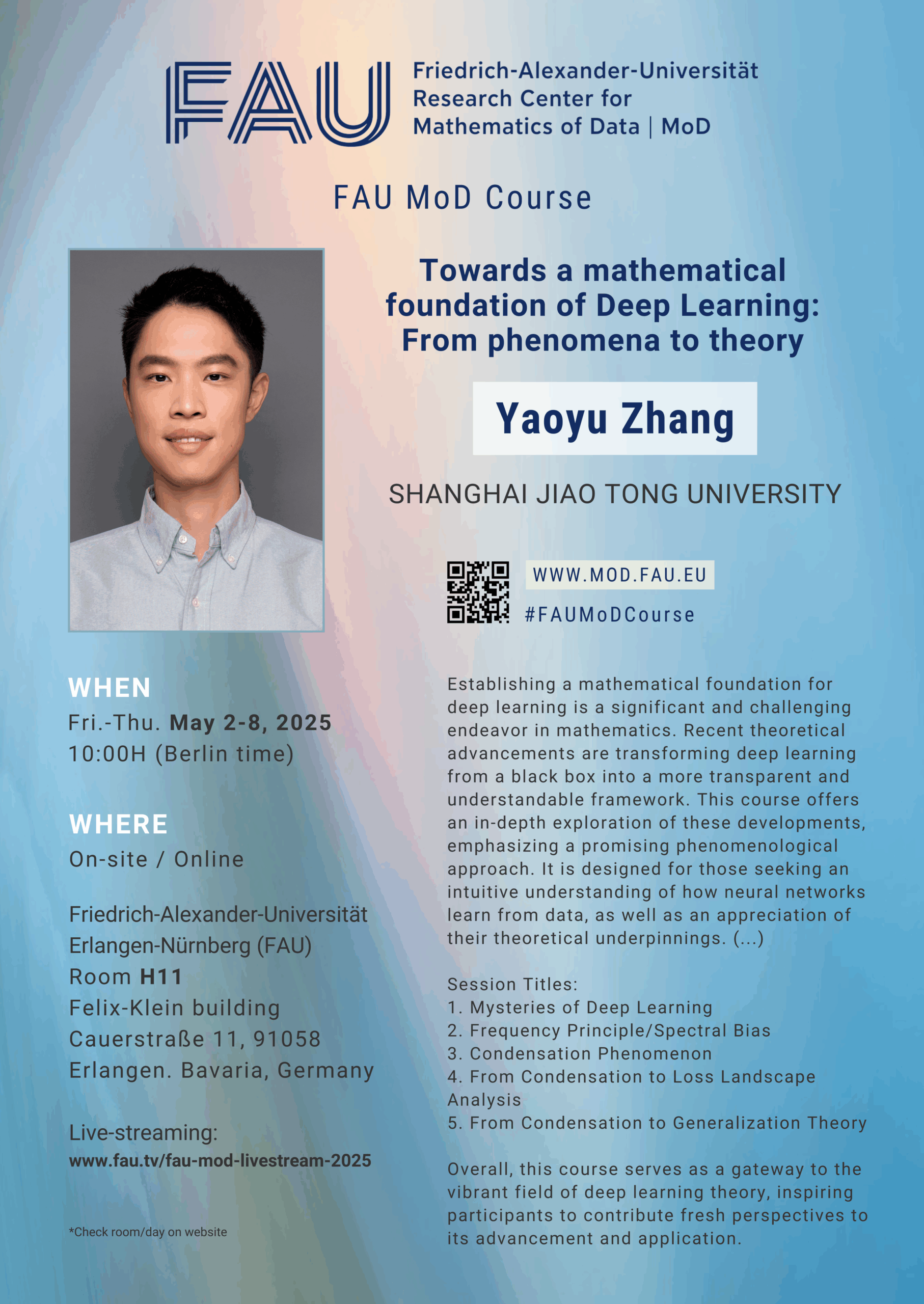

FAU MoD Course: Towards a Mathematical Foundation of Deep Learning: From Phenomena to Theory

Date: Fri. – Thu. May 2 – 8, 2025

Event: FAU MoD Course

Organizer: FAU MoD, Research Center for Mathematics of Data at FAU, Friedrich-Alexander-Universität Erlangen-Nürnberg

Speaker: Prof. Dr. Yaoyu Zhang

Affiliation: Institute of Natural Sciences & School of Mathematical Sciences, Shanghai Jiao Tong University

Abstract. Establishing a mathematical foundation for deep learning is a significant and challenging endeavor in mathematics. Recent theoretical advancements are transforming deep learning from a black box into a more transparent and understandable framework. This course offers an in-depth exploration of these developments, emphasizing a promising phenomenological approach. It is designed for those seeking an intuitive understanding of how neural networks learn from data, as well as an appreciation of their theoretical underpinnings.

We begin by introducing deep learning and its intriguing mysteries, setting the stage for deeper theoretical investigation. Key phenomena discussed include the frequency principle (or spectral bias), where neural networks tend to prioritize learning low-frequency components of data, and the condensation phenomenon, in which neurons within a layer cluster together, effectively reducing the number of independent neurons. Delving into these phenomena, we present detailed theoretical results and explore their implications for the capabilities and limitations of deep learning models.

Furthermore, we demonstrate how the study of condensation motivates novel analyses of the loss landscape and advances in generalization theory. By examining the loss landscape underlying condensation, we uncover a nonconvex hierarchical embedding structure of critical points that influences neural network convergence during training. To explain the generalization advantages of condensation, we introduce the concept of optimistic sample size estimates, highlighting the potential of heavily parameterized neural networks to achieve excellent sample efficiency.

Overall, this course serves as a gateway to the vibrant field of deep learning theory, inspiring participants to contribute fresh perspectives to its advancement and application.

Session Titles:

1. Mysteries of Deep Learning

2. Frequency Principle/Spectral Bias

3. Condensation Phenomenon

4. From Condensation to Loss Landscape Analysis

5. From Condensation to Generalization Theory

BIO.- Yaoyu Zhang is an associate professor at the Institute of Natural Sciences and the School of Mathematical Sciences, Shanghai Jiao Tong University. He earned his Bachelor’s degree in Physics in 2012 and his Ph.D. in Mathematics in 2016 from Shanghai Jiao Tong University. From 2016 to 2020, he conducted postdoctoral research at New York University Abu Dhabi and the Courant Institute, as well as at the Institute for Advanced Study in Princeton. His research focuses on the theoretical foundations of deep learning.

AUDIENCE

This is a hybrid event (on-site/online) designed for advanced master’s students and beyond, focusing on in-depth discussions and technical insights.

Open to: Public, Students, Postdocs, Professors, Faculty, Alumni and the scientific community all around the world.

WHEN

Fri. – Thu. May 2 – 8, 2025

Time-table:

Fri. May 2: H11 (14:00H – 16:00H)

Mon. May 5: H11 (10:00H – 12:00H)

Tue. May 6: H11 (14:00H – 16:00H)

Wed. May 7: H16 (12:00H – 14:00H)

Thu. May 8: H16 (12:00H – 14:00H)

WHERE

On-site / Online

[On-site] FAU, Friedrich-Alexander-Universität Erlangen-Nürnberg, Cauerstraße 7/9, 91058 Erlangen, Bavaria (Germany)

H11. Felix-Klein building. Mathematik/Informatik

H16. EEI-Gebäude, Technische Fakultät

How to get to Erlangen?

[Online] https://www.fau.tv/fau-mod-livestream-2025

Short-link to share this event: https://go.fau.de/1bd–
