Master Thesis: “Gradient Descent on Wide Neural Networks and Federated Learning”

Author: Gisele Stephanie Otiobo

Supervisor: Prof. Dr. DhC. Enrique Zuazua

Date: May 20, 2023

Deep neural networks are complex and difficult to analyze because of their intricate structure and vast number of parameters. The relationship between the parameters and the resulting predictor is entangled by the sequential composition of nonlinear functions, and the non-convexity of the training objective adds further complications. Although they lie beyond the reach of classical learning theory, neural networks generalize well in practice. This project focuses on a neural network with a homogeneous activation function and a wide hidden layer.
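To make the setting concrete, here is a minimal sketch of a two-layer network with a positively homogeneous activation. It assumes ReLU as the activation and the 1/m "mean-field" output scaling that is common in the infinite-width literature; the names `relu` and `two_layer` and the random data are illustrative only, and the exact parameterization used in the thesis may differ. The check at the end shows why such networks are called homogeneous: scaling all of a neuron's parameters by c > 0 scales its contribution by c².

```python
import numpy as np

def relu(z):
    # ReLU is positively 1-homogeneous: relu(c * z) = c * relu(z) for c >= 0
    return np.maximum(z, 0.0)

def two_layer(x, a, W, b):
    """Illustrative mean-field network f(x) = (1/m) * sum_j a_j * relu(w_j . x + b_j).

    x: (n, d) inputs, a: (m,) output weights, W: (m, d) input weights, b: (m,) biases.
    """
    m = a.shape[0]
    return relu(x @ W.T + b) @ a / m

rng = np.random.default_rng(0)
d, m = 3, 1000
x = rng.standard_normal((5, d))
a = rng.standard_normal(m)
W = rng.standard_normal((m, d))
b = rng.standard_normal(m)

# Scaling every neuron's parameters (a_j, w_j, b_j) by c > 0 scales the output by c**2,
# i.e. each neuron a_j * relu(w_j . x + b_j) is 2-homogeneous in its parameters.
c = 1.7
lhs = two_layer(x, c * a, c * W, c * b)
rhs = c**2 * two_layer(x, a, W, b)
print(np.allclose(lhs, rhs))  # True
```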

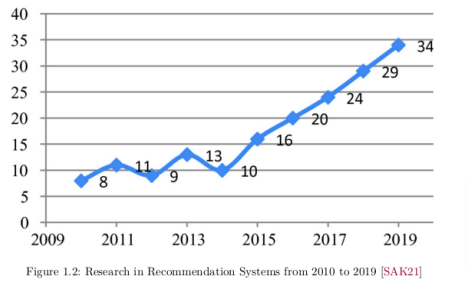

We study qualitative convergence properties, in particular the global convergence guarantees that can be derived. The aim is to gain a good understanding of the associated gradient flow and to connect it with the new paradigm of Federated Learning. Rather than examining finite-width neural networks, the study focuses on networks in the infinite-width limit, where the predictor is characterized by a distribution over weights and biases. This establishes a strong connection between these overparameterized networks and gradient flows. In our numerical experiments, the more neurons the network has, the more closely the training dynamics resemble a flow and the better the model converges. This behavior could change the way models are trained, including in Federated Learning, where more efficient local training helps the clients collaboratively train a better global model.
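The width experiment can be sketched as plain gradient descent, i.e. an explicit Euler discretization of the gradient flow, on a mean-field two-layer ReLU network. This is a hedged illustration, not the thesis code: the 1-D target `|x| - 0.5`, the step size, the number of steps and the helper name `train` are all hypothetical choices. Following the mean-field convention, each neuron's gradient is multiplied by m so that individual neurons move at an m-independent speed, which is the time scaling under which the particle dynamics approach a flow on the distribution of weights as m grows.

```python
import numpy as np

def train(m, steps=2000, lr=0.1, seed=0):
    """Gradient descent on f(x) = (1/m) sum_j a_j relu(w_j x + b_j); returns the loss history."""
    rng = np.random.default_rng(seed)
    # Hypothetical 1-D regression problem, chosen only for illustration
    x = np.linspace(-1.0, 1.0, 40)[:, None]
    y = np.abs(x[:, 0]) - 0.5
    a = rng.standard_normal(m)
    W = rng.standard_normal((m, 1))
    b = rng.standard_normal(m)
    n = len(y)
    losses = []
    for _ in range(steps):
        z = x @ W.T + b              # (n, m) pre-activations
        h = np.maximum(z, 0.0)       # ReLU features
        r = h @ a / m - y            # residuals of the mean-field predictor
        losses.append(0.5 * np.mean(r**2))
        # Per-neuron gradients of the loss, multiplied by m (mean-field time scaling)
        g = (z > 0) * r[:, None] * a  # (n, m): r_i * relu'(z_ij) * a_j
        grad_a = h.T @ r / n
        grad_W = g.T @ x / n
        grad_b = g.sum(axis=0) / n
        a -= lr * grad_a
        W -= lr * grad_W
        b -= lr * grad_b
    return losses

for m in (10, 100, 1000):
    losses = train(m)
    print(f"m={m:5d}  initial loss={losses[0]:.4f}  final loss={losses[-1]:.4f}")
```

Running it lets one compare the final training loss across widths; the printed numbers depend on the hypothetical target and seed and are not the thesis results.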

The study also analyzes the Federated Learning technique on the MNIST dataset, examining both Independent and Identically Distributed (IID) and non-Independent and Identically Distributed (non-IID) data. This took us from the theoretical concept of Federated Learning to its experimental implementation for a finite-width neural network; in these experiments, we obtained a performance of 94% for two models and 98% for four others.
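The federated training loop can be illustrated with a small sketch. It assumes the standard Federated Averaging (FedAvg) scheme: each client runs a few local gradient steps from the current global model, and the server averages the client models weighted by local dataset size. Synthetic, linearly separable data and a logistic-regression model stand in for MNIST and the thesis network; the helpers `make_data`, `partition`, `local_update` and `fedavg`, the client count and the learning rate are hypothetical. The non-IID split sorts examples by label, so each client sees mostly one class.

```python
import numpy as np

def make_data(n=1000, d=5, seed=0):
    # Synthetic binary classification data (hypothetical stand-in for MNIST)
    rng = np.random.default_rng(seed)
    X = rng.standard_normal((n, d))
    w_true = rng.standard_normal(d)
    y = (X @ w_true > 0).astype(float)
    return X, y

def partition(y, k, iid, seed=0):
    # IID: random shuffle; non-IID: label-sorted shards, so clients see skewed labels
    rng = np.random.default_rng(seed)
    idx = rng.permutation(len(y)) if iid else np.argsort(y, kind="stable")
    return np.array_split(idx, k)

def local_update(w, X, y, lr=0.5, epochs=5):
    # A few full-batch gradient steps on the client's logistic loss
    for _ in range(epochs):
        p = 1.0 / (1.0 + np.exp(-(X @ w)))
        w = w - lr * X.T @ (p - y) / len(y)
    return w

def fedavg(X, y, k=4, rounds=20, iid=True):
    shards = partition(y, k, iid)
    w = np.zeros(X.shape[1])
    sizes = np.array([len(s) for s in shards], dtype=float)
    for _ in range(rounds):
        local_models = [local_update(w, X[s], y[s]) for s in shards]
        # Server step: average client models weighted by local dataset size
        w = np.average(local_models, axis=0, weights=sizes)
    return w

def accuracy(w, X, y):
    return np.mean(((X @ w) > 0) == (y == 1))

X, y = make_data()
for iid in (True, False):
    w = fedavg(X, y, iid=iid)
    print("IID" if iid else "non-IID", f"accuracy={accuracy(w, X, y):.3f}")
```

Comparing the two printed accuracies gives a feel for how label skew across clients affects the averaged model; the values are specific to this toy setup.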

See all details at:

Master Thesis: “Gradient Descent on Wide Neural Networks and Federated Learning”, by Gisele Stephanie Otiobo (May 20, 2023)

Figure. Visual representation of a gradient flow (GF) on a two-layer neural network with m = 10, using the ReLU activation function.