Stochastic Synchronization of Chaotic Neurons

Real biological neurons can show chaotic dynamics when driven by certain external input currents. The behavior of such neurons is characterized by instability and, as a result, limited predictability in time. Mathematically, a system is chaotic if it exhibits long-term aperiodic behavior and sensitive dependence on initial conditions on a bounded invariant set.
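
To make "sensitive dependence on initial conditions" concrete, here is a minimal Python sketch. It uses the logistic map f(x) = r x (1 - x) with r = 3.9, a standard toy chaotic system chosen here purely for illustration (it is not a neuron model). It estimates the largest Lyapunov exponent as the orbit average of ln|f'(x)|, where a positive value means nearby trajectories separate exponentially on average, and it tracks two orbits whose starting points differ by only 10^-10.

```python
import math
from typing import List


def logistic(x: float, r: float = 3.9) -> float:
    """One step of the logistic map x -> r*x*(1 - x), a toy chaotic system."""
    return r * x * (1.0 - x)


def lyapunov_exponent(x0: float = 0.2, r: float = 3.9,
                      n_transient: int = 1_000, n_steps: int = 100_000) -> float:
    """Estimate the largest Lyapunov exponent as the orbit average of ln|f'(x)|."""
    x = x0
    for _ in range(n_transient):  # discard the transient so x settles onto the attractor
        x = logistic(x, r)
    total = 0.0
    for _ in range(n_steps):
        total += math.log(abs(r * (1.0 - 2.0 * x)))  # f'(x) = r*(1 - 2x)
        x = logistic(x, r)
    return total / n_steps


def separation_history(x0: float = 0.2, delta: float = 1e-10,
                       r: float = 3.9, n_steps: int = 100) -> List[float]:
    """Return |x_n - y_n| for two orbits whose initial conditions differ by delta."""
    x, y = x0, x0 + delta
    history = []
    for _ in range(n_steps):
        x, y = logistic(x, r), logistic(y, r)
        history.append(abs(x - y))
    return history
```

For r = 3.9, `lyapunov_exponent()` should come out positive, and `separation_history()` typically shows the 10^-10 gap growing to order one within a few dozen iterations, after which the two orbits are effectively uncorrelated.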

Consequently, for a chaotic system, trajectories starting arbitrarily close to each other diverge exponentially with time and quickly become uncorrelated. It follows that two identical but uncoupled chaotic systems do not synchronize: they cannot produce identical chaotic signals unless they are initialized at exactly the same point, which is in general physically or biologically impossible. Synchronization of chaotic systems therefore seems rather surprising at first sight, because one might intuitively expect sensitive dependence on initial conditions to cause an immediate breakdown of any synchronization between coupled chaotic systems. This intuition led to the belief that chaos is uncontrollable and thus unusable.
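
A minimal sketch of why coupling changes the picture, again using the logistic map f(x) = r x (1 - x) as a toy stand-in for a chaotic unit (an illustrative assumption, not a neuron model). Two copies are coupled symmetrically: x' = (1 - ε) f(x) + ε f(y) and y' = (1 - ε) f(y) + ε f(x). The error then obeys x' - y' = (1 - 2ε)(f(x) - f(y)), and since f(x) - f(y) = r (x - y)(1 - x - y) with |1 - x - y| ≤ 1 on [0, 1], we get |e_{n+1}| ≤ r |1 - 2ε| |e_n|. For r = 3.9 this guarantees synchronization whenever roughly 0.372 < ε < 0.628. With ε = 0 the same two systems drift apart from a 10^-10 offset.

```python
from typing import List


def logistic(x: float, r: float = 3.9) -> float:
    """Logistic map f(x) = r*x*(1 - x), used here as a toy chaotic unit."""
    return r * x * (1.0 - x)


def error_history(eps: float, x0: float = 0.2, y0: float = 0.7,
                  r: float = 3.9, n_steps: int = 200) -> List[float]:
    """Iterate two symmetrically coupled logistic maps and record |x_n - y_n|.

    eps = 0 gives two independent (uncoupled) copies of the same chaotic map.
    """
    x, y = x0, y0
    errors = []
    for _ in range(n_steps):
        fx, fy = logistic(x, r), logistic(y, r)
        # Each unit mixes its own update with its partner's (diffusive coupling).
        x, y = (1.0 - eps) * fx + eps * fy, (1.0 - eps) * fy + eps * fx
        errors.append(abs(x - y))
    return errors
```

For example, `error_history(0.4)[-1]` is vanishingly small, while `error_history(0.0, y0=0.2 + 1e-10)` grows to order one. The bound above is only sufficient; a sharper threshold comes from requiring the transverse Lyapunov exponent λ + ln|1 - 2ε| to be negative, which is the idea that the master stability function generalizes to networks.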

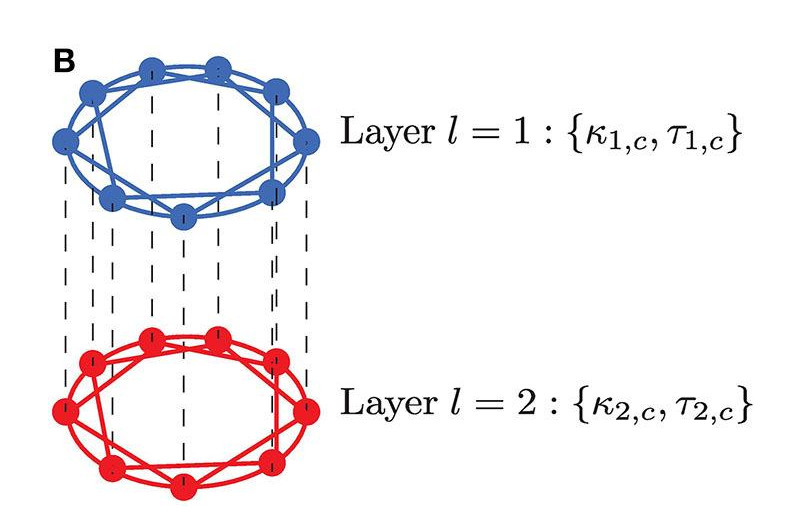

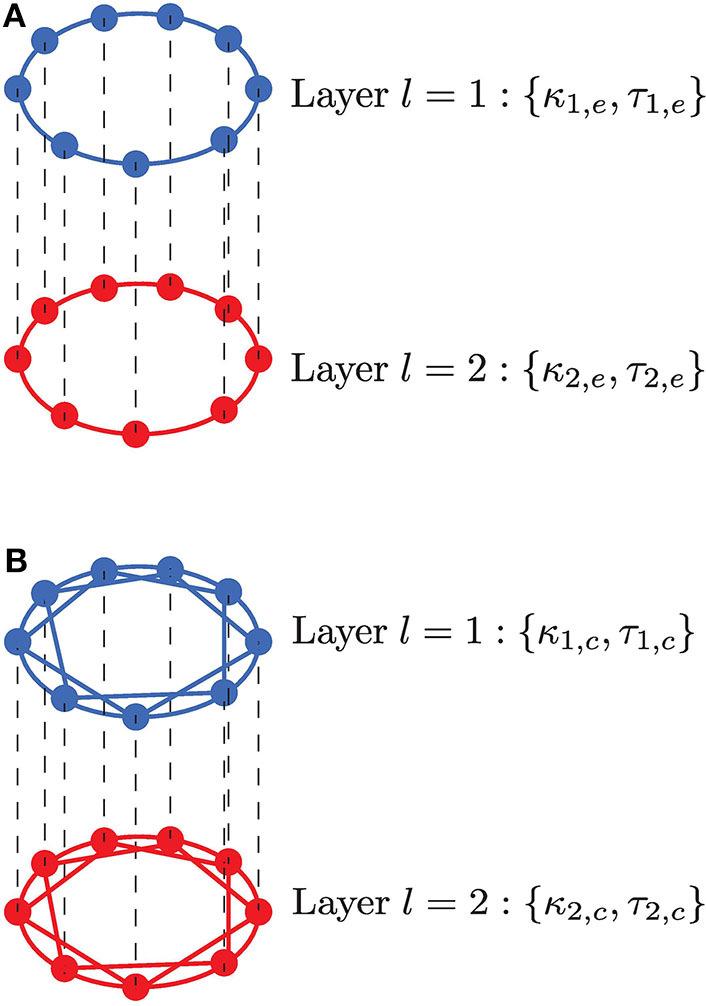

One objective of this research is to develop an integrated approach for studying the relationship between neural network topology and the level of synchrony, including a deformability parameter in the network model. Secondly, I work on developing new stability methods (beyond the classical master stability function and Krasovskii-Lyapunov stability theory) and control schemes for the chaotic synchronization of neurons.
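
As an illustration of a control scheme for synchronizing chaotic neurons, here is a minimal Python sketch of a generic adaptive-coupling law, not the author's method. Assumptions: the neuron model is Hindmarsh-Rose with parameter values often used for irregular bursting (the text does not name a model), a master neuron drives a slave through its membrane variable x only, and the coupling gain adapts as dk/dt = γ (x_m - x_s)^2, so it grows only while the neurons disagree. The names `hindmarsh_rose`, `simulate`, and `GAMMA` are hypothetical.

```python
import math

# Hindmarsh-Rose parameters: assumed values often used for irregular bursting.
A, B, C, D = 1.0, 3.0, 1.0, 5.0
S, R, X_REST, I_EXT = 4.0, 0.006, -1.6, 3.25
GAMMA = 1.0  # hypothetical adaptation rate of the coupling gain


def hindmarsh_rose(x, y, z):
    """Vector field of one uncoupled Hindmarsh-Rose neuron."""
    dx = y - A * x**3 + B * x**2 - z + I_EXT
    dy = C - D * x**2 - y
    dz = R * (S * (x - X_REST) - z)
    return dx, dy, dz


def rhs(state):
    """Master neuron drives the slave's x-equation through an adaptive gain."""
    xm, ym, zm, xs, ys, zs, gain = state
    dxm, dym, dzm = hindmarsh_rose(xm, ym, zm)
    dxs, dys, dzs = hindmarsh_rose(xs, ys, zs)
    ex = xm - xs
    # Adaptive law: d(gain)/dt = GAMMA * ex**2, which is zero once the neurons agree.
    return (dxm, dym, dzm, dxs + gain * ex, dys, dzs, GAMMA * ex * ex)


def rk4_step(state, dt):
    """One classical fourth-order Runge-Kutta step for the 7-dimensional state."""
    s1 = rhs(state)
    s2 = rhs(tuple(v + 0.5 * dt * d for v, d in zip(state, s1)))
    s3 = rhs(tuple(v + 0.5 * dt * d for v, d in zip(state, s2)))
    s4 = rhs(tuple(v + dt * d for v, d in zip(state, s3)))
    return tuple(v + dt / 6.0 * (a + 2.0 * b + 2.0 * c + d)
                 for v, a, b, c, d in zip(state, s1, s2, s3, s4))


def simulate(t_end=1000.0, dt=0.01):
    """Return the final master-slave error norm and the final coupling gain."""
    # Master (x, y, z), slave (x, y, z), and gain(0) = 0: the neurons start apart.
    state = (0.1, 0.2, 3.0, -1.2, -4.0, 3.2, 0.0)
    for _ in range(int(round(t_end / dt))):
        state = rk4_step(state, dt)
    xm, ym, zm, xs, ys, zs, gain = state
    error = math.sqrt((xm - xs) ** 2 + (ym - ys) ** 2 + (zm - zs) ** 2)
    return error, gain
```

Calling `simulate()` runs 10^5 RK4 steps in pure Python (a few seconds); the error should decay toward zero while the gain levels off at a finite value. The long horizon is needed because the slow recovery variable z relaxes on a time scale of order 1/R. The design choice behind adaptation is that no coupling threshold has to be known in advance: a standard Lyapunov argument with V = (error energy) + (k - k*)^2 / (2γ) shows the gain stays bounded whenever some constant gain k* would synchronize the pair.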

Here, the main idea is to introduce an adaptive control law into the neuron model in order to achieve the control objective despite parametric uncertainties, time delays, and especially noise, which is ubiquitous in neural systems. We recently established a new method for checking the stability of synchronization in neural networks based on the generalized Hamiltonian approach. The approach rests on the fact that the variation of the Hamilton energy associated with the error dynamical system of the original neural network is related to the divergence of the vector field, which is responsible for the contraction of phase-space volume. A non-zero variation of this Hamilton energy leads to growth of the synchronization error, and hence to instability of the synchronization manifold, as a parameter is varied; by contrast, a zero Hamilton energy of the error system at a particular parameter value corresponds to a stable synchronization manifold.
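
The link between Hamilton energy and divergence can be written out in the Helmholtz-decomposition form of the generalized Hamiltonian approach. This is our reading of the general technique, not necessarily the exact construction used in the referenced papers; e denotes the synchronization error and G its vector field, both introduced here for illustration. The field is split into a divergence-free conservative part and a dissipative part, and the Hamilton function H(e) is chosen so that the conservative part leaves it unchanged:

$$
\dot{\mathbf{e}} = \mathbf{G}(\mathbf{e}) = \mathbf{G}_c(\mathbf{e}) + \mathbf{G}_d(\mathbf{e}), \qquad \nabla \cdot \mathbf{G}_c = 0, \qquad \nabla H^{\top} \mathbf{G}_c = 0,
$$

$$
\frac{dH}{dt} = \nabla H^{\top} \mathbf{G}_d, \qquad \nabla \cdot \mathbf{G} = \nabla \cdot \mathbf{G}_d .
$$

The rate of change of H is therefore carried entirely by the dissipative field, which also carries all of the divergence, that is, all of the phase-space volume contraction.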

My current goal in this area is to establish a stochastic generalized Hamiltonian approach to the stability of synchronization in stochastic neural networks.
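
One natural starting point for such a stochastic extension (an assumption about the setup, not a result stated here) is to add noise to the error system, d**e** = **G**(**e**) dt + Σ(**e**) d**W**_t with **W**_t a vector Wiener process, and to apply Itô's formula to the Hamilton function H:

$$
dH = \Big[\nabla H^{\top}\mathbf{G} + \tfrac{1}{2}\operatorname{tr}\!\big(\Sigma^{\top}\,\nabla^{2}H\,\Sigma\big)\Big]\,dt + \nabla H^{\top}\Sigma\, d\mathbf{W}_t .
$$

Compared with the deterministic case, the drift of H acquires the Itô correction ½ tr(Σᵀ∇²H Σ), which is non-negative whenever H is convex, so noise tends to raise the Hamilton energy of the error system; the last term has zero expectation under the usual integrability conditions.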

Schemes for controlling synchronization in neural systems (stabilizing it and/or suppressing it) should find applications in the control of chronic neurological disorders such as epilepsy and Parkinson's disease, which have been linked to explosive synchronization in neural networks.

I am mainly interested in the interplay of structure and function in stochastic and dynamical neural computation, from single-cell activity up to the macroscopic limits of large random neural networks. In particular, I aim to understand how complex structural and dynamical phenomena give rise to the powerful computational capabilities of neural networks in the presence of randomness. I am also interested in the mechanisms behind neurological diseases such as epilepsy, Alzheimer's disease, and Parkinson's disease.

References

- Marius E. Yamakou, Chaotic synchronization of memristive neurons: Lyapunov function versus Hamilton function. Nonlinear Dynamics 101, 487-500 (2020)

- Marius E. Yamakou, E. Maeva Inack, F. M. Kakmeni Moukam, Ratcheting and energetic aspects of synchronization in coupled bursting neurons. Nonlinear Dynamics 83, 541-554 (2016)
